Multi-exposure image fusion based on tensor decomposition and convolution sparse representation

-

摘要:

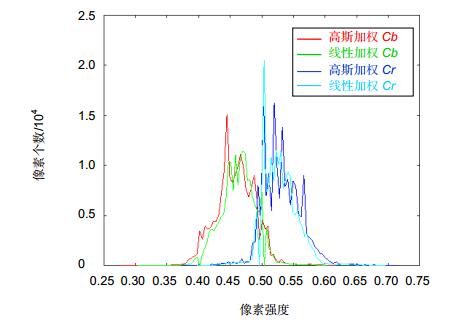

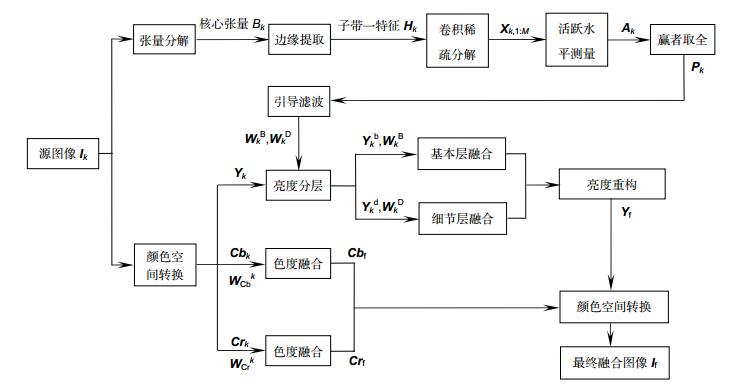

针对多曝光图像融合中存在细节丢失和颜色失真等问题,本文提出了一种基于张量分解和卷积稀疏表示的多曝光图像融合方法。张量分解作为一种对高维数据低秩逼近的方式,在多曝光图像特征提取方面有较大的潜力,而卷积稀疏表示是对整幅图像进行稀疏优化,能最大程度地保留图像的细节信息。同时,为了避免融合图像出现颜色失真,本文采取亮度与色度分别融合的方式。首先通过张量分解得到源图像的核心张量;然后在包含信息最多的第一子带上提取边缘特征;接着对边缘特征图进行卷积稀疏分解,继而利用分解系数的L1范数来得到每个像素的活跃水平;最后用"赢者取全"策略生成权重图,从而加权得到融合后的亮度分量。与亮度融合不同的是,色度分量则采用简单的高斯加权方式进行融合,在一定程度上解决了融合图像的颜色失真问题。实验结果表明,所提出的方法具有良好的细节保留能力。

Abstract:

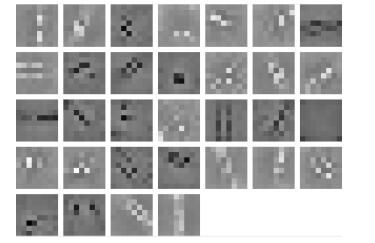

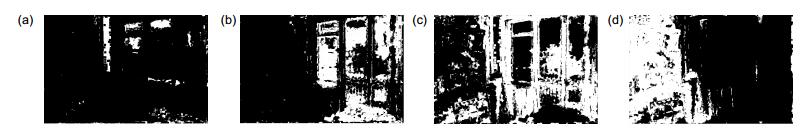

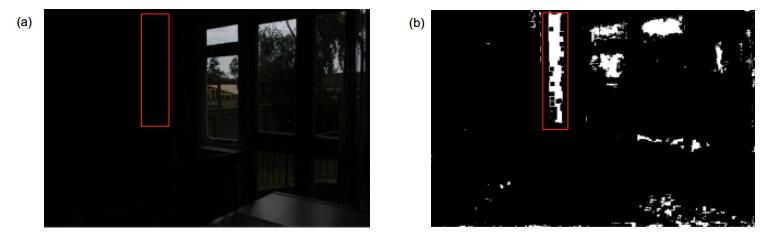

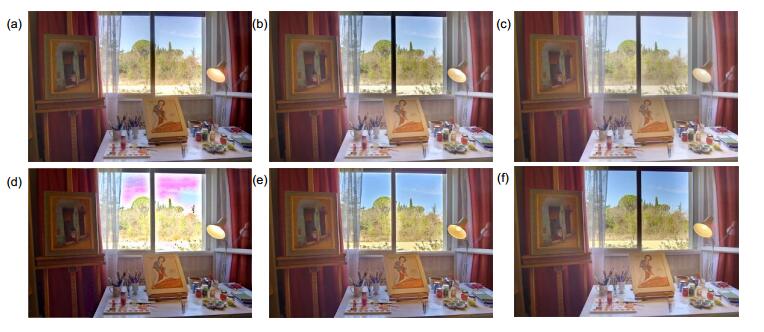

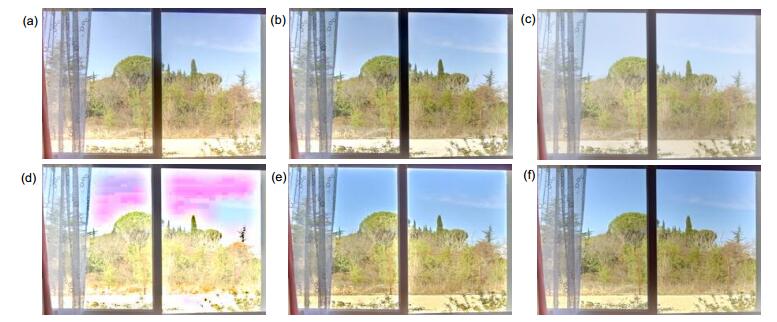

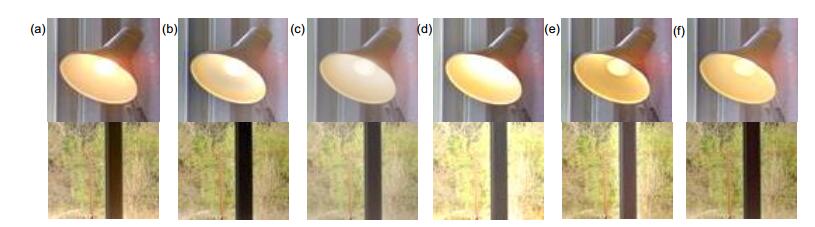

Abstract: To address detail loss and color distortion in multi-exposure image fusion, this paper proposes a multi-exposure image fusion method based on tensor decomposition and convolutional sparse representation. Tensor decomposition is a low-rank approximation approach for high-dimensional data, and it has great potential for extracting features from multi-exposure images. Convolutional sparse representation performs sparse optimization over the whole image, so it can preserve image details to the greatest extent. To avoid color distortion in the fused image, luminance and chrominance are fused separately. First, the core tensor of the source images is obtained by tensor decomposition. Next, edge features are extracted from the first sub-band, which contains the most information. The edge feature map is then sparsely decomposed, and the L1 norm of the decomposition coefficients is used as the activity level of each pixel. Finally, a "winner-take-all" strategy generates the weight maps, and the fused luminance component is obtained by weighting the sources with these maps. The chrominance components follow a different process: they are fused by simple Gaussian weighting, which alleviates color distortion in the fused image to a certain extent. Experimental results show that the proposed method preserves details well.
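The tensor-decomposition step can be illustrated with the higher-order SVD (HOSVD), one standard way to compute a Tucker decomposition (see Kolda and Bader [14]). This section does not say how the tensor is built. The sketch below therefore assumes an H×W×C NumPy array and reads "first sub-band" as the first slice of the core tensor along the last mode. The function names are hypothetical. One HOSVD property supports the "contains the most information" claim: the Frobenius norms of the core slices along a mode equal that mode's singular values, in decreasing order, so the first slice carries the most energy.

```python
import numpy as np


def unfold(tensor, mode):
    """Mode-n unfolding: the mode-n fibres become the columns of a matrix."""
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)


def mode_dot(tensor, matrix, mode):
    """n-mode product tensor x_n matrix, where matrix has shape (J, I_n)."""
    moved = np.moveaxis(tensor, mode, 0)
    rest = moved.shape[1:]
    prod = matrix @ moved.reshape(moved.shape[0], -1)
    return np.moveaxis(prod.reshape((matrix.shape[0],) + rest), 0, mode)


def hosvd(tensor, modes=None):
    """Higher-order SVD: returns (core, factors) with tensor = core x_n U_n.

    Only the listed modes are projected. The other modes keep an identity
    factor, so projecting the last mode alone keeps every core slice
    aligned with the image grid.
    """
    modes = range(tensor.ndim) if modes is None else modes
    core = tensor
    factors = [np.eye(n) for n in tensor.shape]
    for mode in modes:
        # Left singular vectors of the unfolding, sorted by singular value.
        u, _, _ = np.linalg.svd(unfold(tensor, mode), full_matrices=False)
        factors[mode] = u
        core = mode_dot(core, u.T, mode)
    return core, factors


def subband_energies(core):
    """Frobenius norm of each core slice along the last mode ("sub-band")."""
    return np.array([np.linalg.norm(core[..., k]) for k in range(core.shape[-1])])
```

With this sketch, `core, _ = hosvd(image, modes=[2])` followed by `core[..., 0]` gives an image-shaped first sub-band. SVD signs are arbitrary, so the slice may come out negated. An edge magnitude computed on it is unaffected.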

-

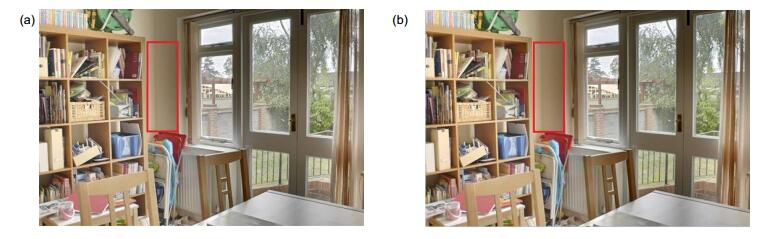

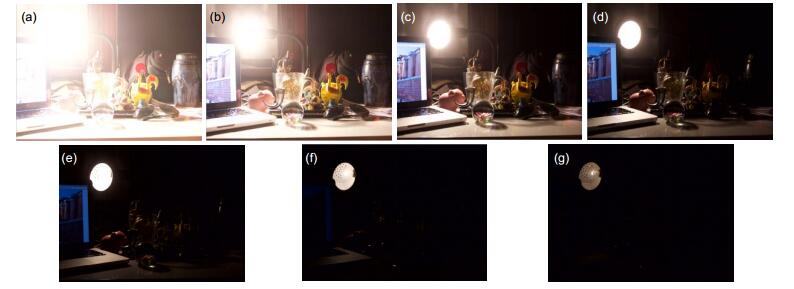

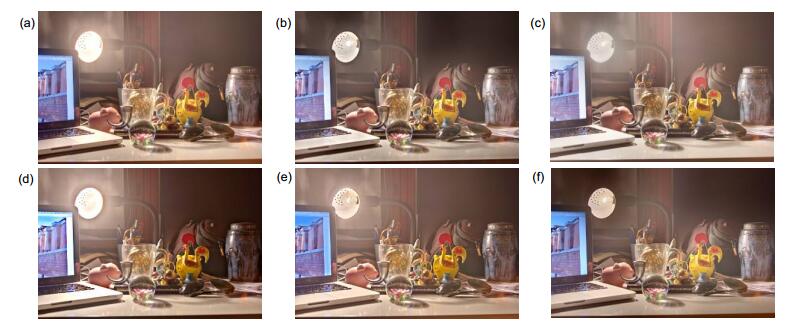

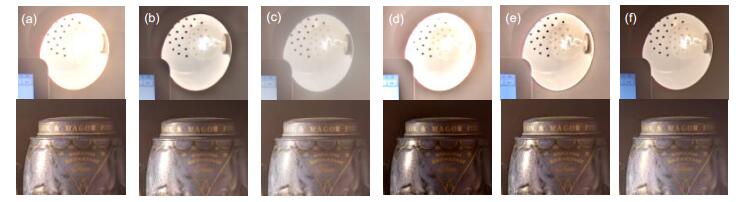

Overview: Real-world scenes typically span a luminance range from $10^{-5}$ cd/m$^2$ to $10^{8}$ cd/m$^2$. Existing imaging and video devices capture only a limited dynamic range of luminance, so they cannot retain all the details of a real scene. In recent years, multi-exposure fusion (MEF), an effective image-quality enhancement technique, has become a popular research topic in the digital media field. MEF combines multiple low dynamic range (LDR) images, taken by an ordinary camera at different exposures, into a single image with rich details and saturated colors. Many MEF algorithms have been proposed, and they achieve good results on image sequences with simple backgrounds. However, when a multi-exposure sequence contains many objects with complex textures, their performance is unsatisfactory, and artifacts such as detail loss and color distortion often appear in the fused images. To address this problem, this paper proposes a multi-exposure image fusion method based on tensor decomposition (TD) and convolutional sparse representation (CSR). TD is a low-rank approximation method for high-dimensional data and has great potential for extracting features from multi-exposure images. CSR performs sparse optimization over the whole image and can therefore retain detail information to the greatest extent. To avoid color distortion in the fused image, luminance and chrominance are fused separately. First, the core tensor of the source images is obtained by tensor decomposition, and edge features are extracted from the first sub-band, which contains the most information. Second, the edge feature map is sparsely decomposed, and the L1 norm of the decomposition coefficients gives the activity level of each pixel. Finally, a "winner-take-all" strategy generates the weight maps, and these maps are used to combine the source luminances into the fused luminance component.
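The luminance pipeline (edge features, CSR, L1-norm activity, winner-take-all weights) can be sketched in NumPy. The CSR solver uses ADMM with a per-frequency Sherman-Morrison solve, the single-image case treated by Wohlberg [18]. Several details are not given in this section and are assumptions:

- the gradient-magnitude edge detector;
- the filter bank (the method presumably uses a learned dictionary; random filters here are for illustration only);
- the values of `lam` and `rho`;
- the optional window averaging of the activity map, modeled on CSR-based fusion [20].

All function names are hypothetical.

```python
import numpy as np


def edge_map(band):
    """Gradient-magnitude edge map (assumed detector, not from the paper)."""
    gy, gx = np.gradient(np.asarray(band, dtype=float))
    return np.hypot(gx, gy)


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def csr_admm(signal, filters, lam=0.05, rho=1.0, n_iter=100):
    """Solve min_x 0.5*||sum_m d_m * x_m - s||^2 + lam * sum_m ||x_m||_1.

    Circular convolution via FFT; ADMM with a Sherman-Morrison x-update.
    signal: (H, W); filters: (M, h, w) with h <= H, w <= W. Returns (M, H, W).
    """
    H, W = signal.shape
    M, h, w = filters.shape
    padded = np.zeros((M, H, W))
    padded[:, :h, :w] = filters
    Df = np.fft.fft2(padded)                      # FFT over the last two axes
    DhS = np.conj(Df) * np.fft.fft2(signal)
    denom = rho + np.sum(np.abs(Df) ** 2, axis=0)
    y = np.zeros((M, H, W))
    u = np.zeros_like(y)
    for _ in range(n_iter):
        Bf = DhS + rho * np.fft.fft2(y - u)
        # (D^H D + rho I)^{-1} b, where D^H D is rank one at each frequency
        Xf = (Bf - np.conj(Df) * np.sum(Df * Bf, axis=0) / denom) / rho
        x = np.real(np.fft.ifft2(Xf))
        y = soft_threshold(x + u, lam / rho)      # sparse split variable
        u += x - y                                # scaled dual update
    return y


def box_mean(a, r):
    """Mean over a (2r+1) x (2r+1) window with edge padding."""
    h, w = a.shape
    p = np.pad(a, r, mode="edge")
    out = np.zeros((h, w))
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += p[dy:dy + h, dx:dx + w]
    return out / (2 * r + 1) ** 2


def activity_level(coeffs, r=0):
    """Per-pixel L1 norm of the coefficient maps, optionally window-averaged."""
    act = np.sum(np.abs(coeffs), axis=0)
    return box_mean(act, r) if r > 0 else act


def winner_take_all(luminances, activities):
    """Binary weight maps (1 for the most active source) and fused luminance."""
    winner = np.argmax(activities, axis=0)
    k = np.arange(activities.shape[0])[:, None, None]
    weights = (k == winner).astype(float)
    return weights, np.sum(weights * luminances, axis=0)
```

For each source k, the activity would be computed as `activity_level(csr_admm(edge_map(band_k), filters), r)` on its first sub-band. The K activity maps are then stacked and passed to `winner_take_all` together with the source luminances. A purely binary weight map can leave visible seams. Whether the paper smooths the weights is not stated here.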
The chrominance components are handled differently from luminance: they are fused by simple Gaussian weighting, based on the characteristics of the color space. The experiments used two image sequences with complex backgrounds. Compared with five other state-of-the-art MEF algorithms, the images fused by the proposed algorithm not only had rich details but also showed no large-scale color distortion. In addition, seven multi-exposure image sequences were selected for objective evaluation, to assess the detail-preserving ability of the proposed algorithm more comprehensively. The experimental results show that the proposed method has a strong ability to preserve edge information.
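The section says only that chrominance is fused by "simple Gaussian weighting" and does not name the color space. The sketch below makes two assumptions, and the function names are hypothetical:

- The color space is full-range BT.601 YCbCr.
- Each source's Cb/Cr is weighted by a Gaussian well-exposedness term on its own luminance, as in Mertens et al. [9] with σ = 0.2.

It shows one plausible reading, not the authors' formula.

```python
import numpy as np


def rgb_to_ycbcr(rgb):
    """Full-range BT.601 (JPEG-style) RGB -> YCbCr for values in [0, 1]."""
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return y, (b - y) / 1.772 + 0.5, (r - y) / 1.402 + 0.5


def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr."""
    r = y + 1.402 * (cr - 0.5)
    b = y + 1.772 * (cb - 0.5)
    g = (y - 0.299 * r - 0.114 * b) / 0.587
    return np.stack([r, g, b], axis=-1)


def fuse_chrominance(lum, cb, cr, sigma=0.2, eps=1e-12):
    """Gaussian-weighted chroma fusion; lum, cb, cr have shape (K, H, W).

    Each source is weighted by exp(-(Y - 0.5)^2 / (2 sigma^2)), so pixels
    that are neither under- nor over-exposed dominate the fused chroma.
    """
    w = np.exp(-((lum - 0.5) ** 2) / (2.0 * sigma ** 2))
    w = w / (np.sum(w, axis=0, keepdims=True) + eps)
    return np.sum(w * cb, axis=0), np.sum(w * cr, axis=0)
```

The fused chroma would be recombined with the separately fused luminance through `ycbcr_to_rgb`. Fusing chroma apart from luminance keeps the detail-driven luminance weights from also dictating color.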

Table 1. Comparison of $Q^{AB/F}$ across different algorithms

| Sequence | Mertens [9] | Kang [10] | Liu [11] | Ma [12] | Ma [13] | Proposed |
|---|---|---|---|---|---|---|
| Room | 0.6629 | 0.6653 | 0.6573 | 0.6263 | 0.6598 | 0.6721 |
| House | 0.6878 | 0.6962 | 0.6910 | 0.5894 | 0.6780 | 0.6997 |
| Forth4 | 0.6462 | 0.6477 | 0.6418 | 0.6258 | 0.6331 | 0.6539 |
| Garage | 0.6860 | 0.6864 | 0.6837 | 0.6686 | 0.6785 | 0.6956 |
| Cafe | 0.6755 | 0.6842 | 0.6865 | 0.6721 | 0.6665 | 0.6866 |
| Tower | 0.7598 | 0.7699 | 0.7793 | 0.7707 | 0.7689 | 0.7695 |
| SwissSunset | 0.6331 | 0.6132 | 0.6028 | 0.5971 | 0.6077 | 0.6279 |
| Average | 0.6788 | 0.6804 | 0.6775 | 0.6500 | 0.6704 | 0.6865 |
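The $Q^{AB/F}$ scores in Table 1 come from the edge-preservation metric of Xydeas and Petrovic [24]. The NumPy sketch below makes three assumptions:

- It uses Sobel gradients and the sigmoid constants (Γ_g = 0.9994, κ_g = −15, σ_g = 0.5; Γ_α = 0.9879, κ_α = −22, σ_α = 0.8; L = 1) common in public implementations. This section does not state them.
- It sums the per-source terms when there are more than two exposures. For exactly two sources this reduces to $Q^{AB/F}$.
- Its function names are hypothetical.

It is not guaranteed to reproduce the table's numbers.

```python
import numpy as np


def sobel(img):
    """3x3 Sobel responses (sx: horizontal, sy: vertical) with edge padding."""
    h, w = img.shape
    p = np.pad(np.asarray(img, dtype=float), 1, mode="edge")

    def win(dy, dx):
        return p[dy:dy + h, dx:dx + w]

    sx = (win(0, 2) + 2 * win(1, 2) + win(2, 2)) - (win(0, 0) + 2 * win(1, 0) + win(2, 0))
    sy = (win(2, 0) + 2 * win(2, 1) + win(2, 2)) - (win(0, 0) + 2 * win(0, 1) + win(0, 2))
    return sx, sy


def strength_and_orientation(img):
    """Edge strength g and orientation alpha in (-pi/2, pi/2)."""
    sx, sy = sobel(img)
    g = np.hypot(sx, sy)
    alpha = np.arctan(sy / np.where(sx == 0, 1e-12, sx))
    return g, alpha


def edge_preservation(src, fused, tg=0.9994, kg=-15.0, dg=0.5,
                      ta=0.9879, ka=-22.0, da=0.8):
    """Per-pixel Q^{XF}: how well the edges of src survive in fused."""
    g_s, a_s = strength_and_orientation(src)
    g_f, a_f = strength_and_orientation(fused)
    ratio = np.minimum(g_s, g_f) / np.maximum(np.maximum(g_s, g_f), 1e-12)
    rel_g = np.where((g_s == 0) | (g_f == 0), 0.0, ratio)
    rel_a = 1.0 - np.abs(a_s - a_f) / (np.pi / 2)
    q_g = tg / (1.0 + np.exp(kg * (rel_g - dg)))
    q_a = ta / (1.0 + np.exp(ka * (rel_a - da)))
    return q_g * q_a, g_s


def q_abf(sources, fused, L=1.0):
    """Edge-strength-weighted average of Q^{XF} over all sources."""
    num, den = 0.0, 0.0
    for src in sources:
        q, g = edge_preservation(src, fused)
        w = g ** L
        num += float(np.sum(q * w))
        den += float(np.sum(w))
    return num / den if den > 0 else 0.0
```

With these constants, a fused image identical to its sources scores about 0.975 rather than 1. Scores such as those in Table 1 are therefore best read comparatively.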

[1] Artusi A, Richter T, Ebrahimi T, et al. High dynamic range imaging technology[J]. IEEE Signal Processing Magazine, 2017, 34(5): 165-172. doi: 10.1109/MSP.2017.2716957

[2] Chiang J C, Kao P H, Chen Y S, et al. High-dynamic-range image generation and coding for multi-exposure multi-view images[J]. Circuits, Systems, and Signal Processing, 2017, 36(7): 2786-2814. doi: 10.1007/s00034-016-0437-x

[3] Du L, Sun H Y, Wang S, et al. High dynamic range image fusion algorithm for moving targets[J]. Acta Optica Sinica, 2017, 37(4): 101-109. doi: 10.3788/aos201737.0410001

[4] Li S T, Kang X D, Fang L Y, et al. Pixel-level image fusion: a survey of the state of the art[J]. Information Fusion, 2017, 33: 100-112. doi: 10.1016/j.inffus.2016.05.004

[5] Zhao C H, Guo Y T, Wang Y L. A fast fusion scheme for infrared and visible light images in NSCT domain[J]. Infrared Physics & Technology, 2015, 72: 266-275. doi: 10.1016/j.infrared.2015.07.026

[6] Chen C, Li Y Q, Liu W, et al. Image fusion with local spectral consistency and dynamic gradient sparsity[C]//Proceedings of 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 2014: 2760-2765.

[7] Sun J, Zhu H Y, Xu Z B, et al. Poisson image fusion based on Markov random field fusion model[J]. Information Fusion, 2013, 14(3): 241-254. doi: 10.1016/j.inffus.2012.07.003

[8] Liu Y, Liu S P, Wang Z F. A general framework for image fusion based on multi-scale transform and sparse representation[J]. Information Fusion, 2015, 24: 147-164. doi: 10.1016/j.inffus.2014.09.004

[9] Mertens T, Kautz J, van Reeth F. Exposure fusion: a simple and practical alternative to high dynamic range photography[J]. Computer Graphics Forum, 2009, 28(1): 161-171. doi: 10.1111/cgf.2009.28.issue-1

[10] Li S T, Kang X D, Hu J W. Image fusion with guided filtering[J]. IEEE Transactions on Image Processing, 2013, 22(7): 2864-2875. doi: 10.1109/TIP.2013.2244222

[11] Liu Y, Wang Z F. Dense SIFT for ghost-free multi-exposure fusion[J]. Journal of Visual Communication and Image Representation, 2015, 31: 208-224. doi: 10.1016/j.jvcir.2015.06.021

[12] Ma K D, Li H, Yong H W, et al. Robust multi-exposure image fusion: a structural patch decomposition approach[J]. IEEE Transactions on Image Processing, 2017, 26(5): 2519-2532. doi: 10.1109/TIP.2017.2671921

[13] Ma K D, Duanmu Z F, Yeganeh H, et al. Multi-exposure image fusion by optimizing a structural similarity index[J]. IEEE Transactions on Computational Imaging, 2018, 4(1): 60-72. doi: 10.1109/TCI.2017.2786138

[14] Kolda T G, Bader B W. Tensor decompositions and applications[J]. SIAM Review, 2009, 51(3): 455-500. doi: 10.1137/07070111X

[15] Wang H Z, Ahuja N. A tensor approximation approach to dimensionality reduction[J]. International Journal of Computer Vision, 2008, 76(3): 217-229. doi: 10.1007/s11263-007-0053-0

[16] Zeiler M D, Krishnan D, Taylor G W, et al. Deconvolutional networks[C]//Proceedings of 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 2010: 2528-2535.

[17] Chen S S, Donoho D L, Saunders M A. Atomic decomposition by basis pursuit[J]. SIAM Journal on Scientific Computing, 1998, 20(1): 33-61. doi: 10.1137/S1064827596304010

[18] Wohlberg B. Efficient algorithms for convolutional sparse representations[J]. IEEE Transactions on Image Processing, 2016, 25(1): 301-315. doi: 10.1109/TIP.2015.2495260

[19] Liu J L, Garcia-Cardona C, Wohlberg B, et al. Online convolutional dictionary learning[C]//Proceedings of 2017 IEEE International Conference on Image Processing, Beijing, China, 2017.

[20] Liu Y, Chen X, Ward R K, et al. Image fusion with convolutional sparse representation[J]. IEEE Signal Processing Letters, 2016, 23(12): 1882-1886. doi: 10.1109/LSP.2016.2618776

[21] Paul S, Sevcenco I S, Agathoklis P. Multi-exposure and multi-focus image fusion in gradient domain[J]. Journal of Circuits, Systems, and Computers, 2016, 25(10): 1650123. doi: 10.1142/S0218126616501231

[22] Banterle F, Artusi A, Debattista K, et al. Advanced High Dynamic Range Imaging: Theory and Practice[M]. Natick, MA: A K Peters, 2011.

[23] Ma K D. Multi-Exposure Image Fusion by Optimizing A Structural Similarity Index[DB/OL]. https://ece.uwaterloo.ca/~k29ma/dataset/MEFOpt_Database, 2018.

[24] Xydeas C S, Petrovic V. Objective image fusion performance measure[J]. Electronics Letters, 2000, 36(4): 308-309. doi: 10.1049/el:20000267
