Soft multilabel learning and deep feature fusion for unsupervised person re-identification


摘要:

跨摄像头场景中依赖面向标签映射关系的学习以提高识别精度,有监督行人重识别模型虽然识别精度较好,但存在可扩展问题,诸如算法识别精度严重依赖有效的监督信息,算法实时性差等;针对上述问题,提出一种基于软多标签的无监督行人重识别算法。为了提高标签匹配精度,首先利用软多标签逼近真实标签,通过计算参考数据集和参考代理在软多标签函数中的损失函数,预训练参考数据集,并构建预训练与训练结果的映射模型。再通过生成数据和真实数据分布的最小距离的期望即简化的2-Wasserstein距离计算相机视图中软多标签均值和标准差得到损失函数,解决跨视域标签一致性问题。为了提高软多标签对未标记目标数据集的有效性,计算联合嵌入损失,挖掘不同类别间的相似对,纠正跨域分布错位。针对残差网络训练时长和无监督学习精度低的问题,通过结合压缩激励网络(SENet)和多层级深度特征融合改进残差网络的结构,提高训练速度和精度。实验结果表明,该方法在标准数据集下的首位命中率和平均精度均值优于先进相关算法。

Abstract:

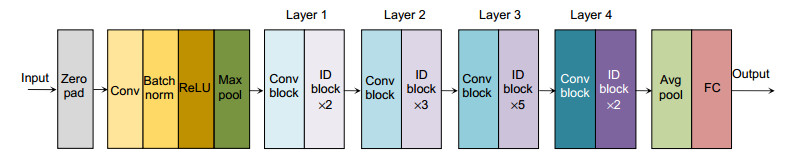

Abstract:In cross-camera scenarios, it relies on the learning of label mapping relationships to improve recognition accuracy. The supervised person re-identification model has better recognition accuracy, but there are scalability problems. For example, the accuracy of algorithm identification relies heavily on effective supervised information. When adding a small amount of data in the classification process, all data needs to be reprocessed, resulting in poor real-time performance. Aiming at the above problems, an unsupervised person re-identification algorithm based on soft label is proposed. In order to improve the accuracy of label matching, first, learn soft multilabel to make it close to the real label, and obtain the reference agent by calculating the loss function of the reference data set to achieve the purpose of pre-training the reference data set. Then, calculate the expected value of the minimum distance between the generated data and the real data distribution (using the simplified 2-Wasserstein distance), calculate the mean and standard deviation vector of the soft multilabel in the camera view, and the resulting loss function can solve cross-view domain label consistency issues. In order to improve the validity of the soft tag on the unmarked target data set, the joint embedding loss is calculated, the similar pairs between different categories are mined, and the cross-domain distribution misalignment is corrected. In view of the problem that the residual network training duration and the unsupervised learning accuracy are low, the structure of the residual network is improved by combining the SENet and fusing multi-level depth feature to improve the training speed and accuracy. The experimental results show that the rank-1 and mAP are better than advanced correlation algorithms.


Key words:

- ResNet

- person re-identification

- soft multilabel

- unsupervised

- deep feature


Overview: Person re-identification retrieves a pedestrian of interest from images captured by cameras, returning the gallery images most similar to a query image of that person. The technology saves considerable time and manpower when searching a pedestrian database for images of a suspect, and has good application prospects in intelligent security, criminal investigation, and image retrieval. Supervised person re-identification models achieve good accuracy but scale poorly: their accuracy depends heavily on effective supervision, and adding even a small amount of data during classification requires reprocessing all data, which hurts real-time performance. To address these problems, an unsupervised person re-identification algorithm based on soft multilabels is proposed. The features of each unlabeled target are learned and compared with a labeled reference dataset, so that each unlabeled target receives a soft multilabel. To obtain more accurate soft multilabels, we introduce reference agents, which replace the labeled reference dataset in the comparison with unlabeled targets, and we pre-train on the reference dataset to reduce the difference between the reference agents and the labeled reference dataset. We also use three loss functions, which respectively mine hard negative pair information, make the cross-camera labels of the same target consistent, and correct cross-domain distribution misalignment. Mining hard negative pairs identifies negative pairs more accurately and pushes them farther apart. Cross-camera label consistency reduces the gap between the soft multilabel distributions of the same target under different cameras: the mean and standard deviation vectors of the soft multilabels in each camera view are computed and compared using the simplified 2-Wasserstein distance. To further improve the effectiveness of the reference agents and correct cross-domain distribution misalignment, a loss function is designed that, for each reference agent, finds the unlabeled people close to it. During feature extraction, we use multi-level deep feature fusion to complement deep features with shallow features, which makes the features more robust and thereby improves recognition accuracy. We also integrate squeeze-and-excitation networks (SENet) into the residual network to obtain an effect similar to an attention mechanism and improve the learning speed. Experimental results show that the rank-1 accuracy and mAP of the proposed method are superior to those of related state-of-the-art algorithms.
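The soft multilabel and the cross-camera consistency term can be illustrated with a small sketch. This page does not give the formulas, so the code assumes two common forms: a soft multilabel computed as a softmax over dot-product similarities between a feature and each reference agent, and a simplified squared 2-Wasserstein distance between two diagonal Gaussians, which reduces to the squared difference of the mean vectors plus the squared difference of the standard deviation vectors. The function names are hypothetical, and the code is an illustration rather than the authors' implementation.

```python
import math


def soft_multilabel(feature, agents):
    """Softmax over dot-product similarities to each reference agent (assumed form)."""
    sims = [sum(f * a for f, a in zip(feature, agent)) for agent in agents]
    top = max(sims)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in sims]
    total = sum(exps)
    return [e / total for e in exps]


def mean_std(vectors):
    """Per-dimension mean and (population) standard deviation of a set of vectors."""
    n = len(vectors)
    dim = len(vectors[0])
    mean = [sum(v[d] for v in vectors) / n for d in range(dim)]
    std = [
        math.sqrt(sum((v[d] - mean[d]) ** 2 for v in vectors) / n)
        for d in range(dim)
    ]
    return mean, std


def simplified_w2_squared(labels_a, labels_b):
    """Squared 2-Wasserstein distance between diagonal Gaussians fitted to two label sets."""
    mu_a, sd_a = mean_std(labels_a)
    mu_b, sd_b = mean_std(labels_b)
    mean_term = sum((x - y) ** 2 for x, y in zip(mu_a, mu_b))
    std_term = sum((x - y) ** 2 for x, y in zip(sd_a, sd_b))
    return mean_term + std_term


# Toy usage: two reference agents, one unlabeled feature.
label = soft_multilabel([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
# Toy usage: soft multilabels from one camera view vs. another set.
gap = simplified_w2_squared([[0.0, 0.0], [2.0, 2.0]], [[1.0, 1.0], [1.0, 1.0]])
```

In a consistency loss of this kind, the second label set would typically be the soft multilabels pooled over all camera views, so that each view's statistics are pulled toward the global ones.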


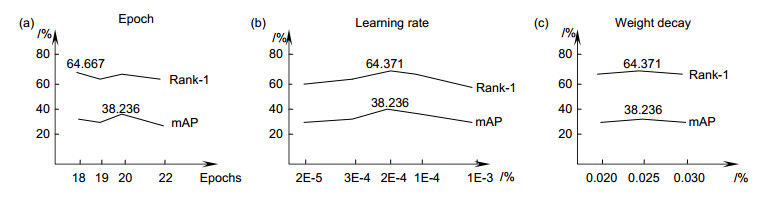

Figure 6. Hyperparameter tuning results for SE_ResNet-50. (a) Results for varying the number of epochs; (b) results for varying the learning rate; (c) results for varying the weight decay
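SE_ResNet-50 denotes a ResNet-50 whose residual blocks include squeeze-and-excitation (SE) recalibration. As a reminder of what one SE block computes, here is a minimal pure-Python sketch: global average pooling per channel (squeeze), two fully connected layers with ReLU and sigmoid (excitation), then channel-wise rescaling. The function name, the omission of biases, and the toy weights are assumptions made for illustration; this is not the network configuration used in the experiments.

```python
import math


def se_block(feature_map, w1, w2):
    """Apply squeeze-and-excitation to a feature map.

    feature_map: list of C channels, each an H x W nested list.
    w1: (C // r) x C weight matrix of the first FC layer (bias omitted).
    w2: C x (C // r) weight matrix of the second FC layer (bias omitted).
    """
    # Squeeze: one scalar per channel via global average pooling.
    squeezed = [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives one gate in (0, 1) per channel.
    hidden = [max(0.0, sum(w * z for w, z in zip(row, squeezed))) for row in w1]
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w2
    ]
    # Scale: multiply every value in a channel by that channel's gate.
    return [
        [[value * gate for value in row] for row in channel]
        for channel, gate in zip(feature_map, gates)
    ]


# Toy usage: 2 channels of size 1 x 2, reduction ratio r = 2, all-zero weights.
# Zero weights make every gate sigmoid(0) = 0.5, so the output is half the input.
toy_map = [[[2.0, 4.0]], [[6.0, 8.0]]]
scaled = se_block(toy_map, w1=[[0.0, 0.0]], w2=[[0.0], [0.0]])
```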

Table 1. The effect of pre-training on training results

| Train | Pre-train | Market R1 | Market R5 | Market R10 | Market mAP |
| --- | --- | --- | --- | --- | --- |
| ResNet50 | ResNet50(loss_total=0.622) | 66.627 | 81.977 | 86.609 | 39.361 |
| ResNet50 | ResNet50, imageNet=None | 61.401 | 77.316 | 82.245 | 33.930 |
| ResNet50 | None | 42.548 | 60.778 | 69.032 | 22.139 |
| SE_ResNet50 | SE_ResNet50 | 51.989 | 69.893 | 77.078 | 28.330 |
| SE_ResNet50 | ResNet50(loss_total=0.622) | 64.371 | 81.621 | 86.461 | 38.236 |
| SE_ResNet50 | None | 35.362 | 51.425 | 58.462 | 17.403 |

Table 2. Feature fusion experimental results

| Methods | Market R1 | Market R5 | Market R10 | Market mAP |
| --- | --- | --- | --- | --- |
| ResNet50+layer1+layer3 | 52.049 | 69.567 | 76.395 | 28.279 |
| ResNet50+layer1+layer4 | 66.330 | 81.473 | 86.520 | 39.581 |
| ResNet50+layer2+layer3 | 51.306 | 69.240 | 76.306 | 28.113 |
| ResNet50+layer2+layer4 | 62.500 | 77.316 | 83.254 | 35.884 |
| ResNet50+layer3+layer4 | 67.102 | 81.977 | 86.876 | 40.036 |
| ResNet50+layer1+layer3+layer4 | 68.973 | 82.601 | 86.995 | 41.188 |
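The feature fusion experiments combine the outputs of different ResNet50 stages. This page does not state the fusion operator, so the sketch below assumes the simplest option: global average pooling of each selected stage followed by concatenation. The function names and the toy feature maps are hypothetical.

```python
def global_avg_pool(feature_map):
    """Reduce a C x H x W nested-list feature map to a length-C vector."""
    return [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]


def fuse_levels(feature_maps):
    """Concatenate the pooled vectors of several stages (assumed fusion rule)."""
    fused = []
    for feature_map in feature_maps:
        fused.extend(global_avg_pool(feature_map))
    return fused


# Toy usage: a shallow stage with 2 channels (2 x 2) and a deep stage with 1 channel (1 x 1).
shallow = [[[1.0, 3.0], [5.0, 7.0]], [[0.0, 0.0], [0.0, 4.0]]]
deep = [[[2.0]]]
descriptor = fuse_levels([shallow, deep])
```

With a standard ResNet-50, whose four stages output 256, 512, 1024 and 2048 channels, concatenating layer1, layer3 and layer4 in this way would give a 3328-dimensional descriptor.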

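Table 3. Ablation study

| Methods | Market-1501 R1 | Market-1501 R5 | Market-1501 R10 | Market-1501 mAP | DukeMTMC-reID R1 | DukeMTMC-reID R5 | DukeMTMC-reID R10 | DukeMTMC-reID mAP |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| w/o LCML | 60.0 | 75.9 | 81.9 | 34.6 | 63.2 | 77.2 | 82.5 | 44.9 |
| w/o LRAL | 59.2 | 76.4 | 82.3 | 30.8 | 57.9 | 72.6 | 77.8 | 37.1 |
| w/o LCML & LRAL | 53.9 | 71.5 | 77.7 | 28.2 | 60.1 | 73.0 | 78.4 | 40.4 |
| ResNet50+LMAR | 66.627 | 81.977 | 86.609 | 39.361 | 67.1 | 79.8 | 84.2 | 48.0 |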

Table 4. Comparison of unsupervised person re-identification accuracy with related methods

| Methods | Reference | Market R1 | Market R5 | Market mAP | Duke R1 | Duke R5 | Duke mAP |
| --- | --- | --- | --- | --- | --- | --- | --- |
| CAMEL[5] | ICCV’17 | 54.5 | 73.1 | 26.3 | 40.3 | 57.6 | 19.8 |
| PUL[6] | ToMM’18 | 45.5 | 60.7 | 20.5 | 30.0 | 43.4 | 16.4 |
| TJ-AIDL[7] | CVPR’18 | 58.2 | 74.8 | 26.5 | 44.3 | 59.6 | 23.0 |
| PTGAN[8] | CVPR’18 | 38.6 | 57.3 | 15.7 | 27.4 | 43.6 | 13.5 |
| SPGAN[9] | CVPR’18 | 51.5 | 70.1 | 27.1 | 41.1 | 56.6 | 22.3 |
| HHL[10] | ECCV’18 | 62.2 | 78.8 | 31.4 | 46.9 | 61.0 | 27.2 |
| MMFA[22] | BMVC’18 | 45.3 | - | 24.7 | 56.7 | - | 27.4 |
| DECAMEL[23] | SCI’18 | 60.24 | - | 32.44 | - | - | - |
| ARN[24] | CVPRW’19 | 70.3 | 80.4 | 39.4 | 60.2 | 73.9 | 33.4 |
| BUC[25] | AAAI’19 | 66.2 | 79.6 | 38.3 | 47.4 | 62.6 | 27.5 |
| MAR[11] | CVPR’19 | 67.7 | 81.9 | 40.0 | 67.1 | 79.8 | 48.0 |
| The proposed method | This work | 68.97 | 82.6 | 41.2 | 68.6 | 80.6 | 50.1 |
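The R1, R5, R10 and mAP columns in the tables are the standard retrieval metrics for person re-identification. As a reading aid, the sketch below computes them from per-query ranked match lists, where each list marks whether the gallery image at that rank shows the same person as the query. The function names and toy data are hypothetical, and dataset-specific protocol details (such as discarding gallery images from the query's own camera) are deliberately left out.

```python
def rank_k_hit(ranked_matches, k):
    """True if any of the top-k gallery results is a correct match."""
    return any(ranked_matches[:k])


def average_precision(ranked_matches):
    """Mean of the precision values taken at each rank where a correct match occurs."""
    hits = 0
    precisions = []
    for rank, is_match in enumerate(ranked_matches, start=1):
        if is_match:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def evaluate(all_ranked_matches, ks=(1, 5, 10)):
    """Return ({k: rank-k accuracy}, mAP) over all queries, as fractions in [0, 1]."""
    n = len(all_ranked_matches)
    cmc = {k: sum(rank_k_hit(r, k) for r in all_ranked_matches) / n for k in ks}
    mean_ap = sum(average_precision(r) for r in all_ranked_matches) / n
    return cmc, mean_ap


# Toy usage: two queries, three gallery images each, already sorted by similarity.
queries = [
    [True, False, True],   # correct matches at ranks 1 and 3
    [False, True, False],  # correct match at rank 2
]
cmc, mean_ap = evaluate(queries)
```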

[1] Xiong F, Xiao Y, Cao Z G, et al. Good practices on building effective CNN baseline model for person re-identification[J]. Proceedings of SPIE, 2019, 11069: 110690I.

[2] Wang S Q, Xu X, Liu L, et al. Multi-level feature fusion model-based real-time person re-identification for forensics[J]. Journal of Real-Time Image Processing, 2020, 17(1): 73-81. doi: 10.1007/s11554-019-00908-4

[3] Bak S, Carr P, Lalonde J F. Domain adaptation through synthesis for unsupervised person re-identification[J]. ECCV, 2018: 189-205. http://link.springer.com/chapter/10.1007/978-3-030-01261-8_12

[4] Ye M, Li J W, Ma A J, et al. Dynamic graph co-matching for unsupervised video-based person re-identification[J]. IEEE Transactions on Image Processing, 2019, 28(6): 2976-2990. doi: 10.1109/TIP.2019.2893066

[5] Yu H X, Wu A C, Zheng W S. Cross-view asymmetric metric learning for unsupervised person re-identification[C]// Proceedings of 2017 IEEE International Conference on Computer Vision, Venice, 2017: 994-1002.

[6] Fan H H, Zheng L, Yan C G, et al. Unsupervised person re-identification: clustering and fine-tuning[J]. ACM Transactions on Multimedia Computing, Communications, and Applications, 2018, 14(4): 83. http://arxiv.org/abs/1705.10444

[7] Wang J Y, Zhu X T, Gong S G, et al. Transferable joint attribute-identity deep learning for unsupervised person re-identification[C]//Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, 2018: 2275-2284.

[8] Wei L G, Zhang S L, Gao W, et al. Person transfer GAN to bridge domain gap for person re-identification[C]//Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, 2018: 79-88.

[9] Deng W J, Zheng L, Ye Q X, et al. Image-image domain adaptation with preserved self-similarity and domain-dissimilarity for person re-identification[C]//Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, 2018: 994-1003.

[10] Zhong Z, Zheng L, Li S Z, et al. Generalizing a person retrieval model hetero-and homogeneously[C]//Proceedings of the European Conference on Computer Vision, Glasgow, 2018: 172-188.

[11] Yu H X, Zheng W S, Wu A C, et al. Unsupervised person re-identification by soft multilabel learning[C]//Proceedings of 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, 2019: 2148-2157.

[12] He R, Wu X, Sun Z N, et al. Wasserstein CNN: learning invariant features for NIR-VIS face recognition[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2018, 41(7): 1761-1773. http://ieeexplore.ieee.org/document/8370677/

[13] Wang F, Xiang X, Cheng J, et al. NormFace: L2 hypersphere embedding for face verification[C]//Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, California, 2017: 1041-1049.

[14] Hu J, Shen L, Sun G. Squeeze-and-excitation networks[C]//Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, 2018: 7132-7141.

[15] Wang C, Zhang Q, Huang C, et al. Mancs: a multi-task attentional network with curriculum sampling for person re-identification[C]//Proceedings of the 15th European Conference on Computer Vision, Munich, 2018: 365-381.

[16] Fan H, Zheng L, Yan C, et al. Unsupervised person re-identification by deep learning tracklet association[J]. ACM Transactions on Multimedia Computing, Communications, and Applications, 2018, 14(4): 1-18. http://arxiv.org/abs/1809.02874

[17] He K M, Zhang X Y, Ren S Q, et al. Deep residual learning for image recognition[C]//Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, 2016: 770-778.

[18] Wang Y, Wang L Q, You Y R, et al. Resource aware person re-identification across multiple resolutions[C]//Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, 2018: 8042-8051.

[19] Hu Y, Wen G H, Luo M N, et al. Competitive inner-imaging squeeze and excitation for residual network[Z]. arXiv: 1807.08920[cs: CV], 2018.

[20] Zheng L, Shen L Y, Tian L, et al. Scalable person re-identification: a benchmark[C]//Proceedings of 2015 IEEE International Conference on Computer Vision, Santiago, 2015: 1116-1124.

[21] Zheng Z D, Zheng L, Yang Y. Unlabeled samples generated by GAN improve the person re-identification baseline in vitro[C]//Proceedings of 2017 IEEE International Conference on Computer Vision, Venice, 2017: 3754-3762.

[22] Lin S, Li H L, Li C T, et al. Multi-task mid-level feature alignment network for unsupervised cross-dataset person re-identification[Z]. arXiv: 1807.01440[cs: CV], 2018.

[23] Yu H X, Wu A C, Zheng W S. Unsupervised person re-identification by deep asymmetric metric embedding[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2018, 42(4): 956-973.

[24] Li Y J, Yang F E, Liu Y C, et al. Adaptation and re-identification network: an unsupervised deep transfer learning approach to person re-identification[C]//Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, 2018: 172-178.

[25] Lin Y T, Dong X Y, Zheng L, et al. A bottom-up clustering approach to unsupervised person re-identification[C]// Proceedings of the 33rd AAAI Conference on Artificial Intelligence, 2019: 8738-8745.
